ACDESIGN

This project is a continuation of the research I conducted in my first semester, where I investigated the implications of generative AI in the creative industry. In my initial case study, I reviewed existing literature on the progression and dangers of AI, exploring current attitudes towards the advancement of generative AI’s abilities. I also conducted primary research via a questionnaire designed to collect data on the general public’s opinions on the subject. The aim of this case study was to predict the scope of generative AI in the future, in order to inform designers operating within the creative industry on how they could adapt their workflow and career to incorporate gen AI. The key finding was a gap between human design and gen AI’s current capabilities. Generative AI tools can only reproduce pre-existing content based on the datasets that they are given. As a result, nothing AI produces is truly original, and that is where human design surpasses it. This was further confirmed by the primary research, where the majority of survey participants stated that they preferred human content due to its originality and creativity.

To build on the previous case study, the second part of this project takes a more practical approach, allowing me to substantiate my previous findings and work towards a solution for incorporating generative AI into the creative industry. In this project I created a series of designs based on fictitious client briefs. For each brief, I created one design using traditional design methods, one with the assistance of AI (for generating assets or concepts) and one that was generated entirely by AI tools. This work was then presented to the general public in the form of a survey, where respondents chose their favourite designs, providing evidence of which workflow was typically preferred. It was also vital to consider other factors, such as the time taken to make each design and the quality of the content (symmetry, editability and sharpness). This project will help to ascertain in which areas of the creative industry generative AI can assist designers, and in which areas it may even surpass human design.

I first explored the current limitations of generative AI models to inform my process for creating each type of content. To make the comparison between human-produced design and AI-generated content fair, it was vital to understand the limitations of each tool and to ensure I was using the most up-to-date model. Feuerriegel et al. (2023) note that generative AI can infringe copyright, as it is primarily trained on data from pre-existing works. This is worth considering when using these tools, to ensure they are used ethically and responsibly. To choose which generative AI model to use, I considered multiple articles. One popular tool is Midjourney, known for its efficient output and high-quality image generation. However, as Ian MacLean (2024) explored, Midjourney (and similar tools such as Stable Diffusion and Lexica) is better suited to abstract imagery than to individual asset creation and design. Ultimately, I decided to use ChatGPT-4o for the AI-generated portion of the content for this module due to its advanced and accurate text rendering, as well as its ability to edit pre-existing images. Robison (2025) reports on the advancements in the latest ChatGPT model, describing its new multimodal processing, which allows the tool to use text, audio and image input together to maximise context and accuracy. Based on my research into current gen AI model capabilities, I predict that this project will show that content made by humans with the assistance of artificial intelligence is the preferred type of design, and that this is the best workflow to employ in the creative industry going forward.

As the initial case study for this project explored, generative AI is beginning to dominate the creative industry. Donelli (2024) affirms that the presence of readily available tools such as ChatGPT lowers the skill floor for people to create high-quality designs and pieces of content. The resulting over-saturation of the market makes the prospect of standing out as a designer far more difficult. Despite this, there are downsides to relying solely on AI to generate content in its current state. Images produced by AI are created through a diffusion process, whereby content is approximated based on data from pre-existing images related to the text prompt the user provides. As a result, these images contain small inaccuracies, such as unwanted asymmetry and artifacts baked into the image. In addition, the editability of AI-produced images is minimal, since there are no layers available for editing specific parts of the image. Korotenko (2023) supports this view by highlighting ways in which synthetic images produced by AI can be spotted. A solution to this problem is to use generative AI sparingly, as an assistive tool: for example, using AI to find unique solutions to content spacing, or to produce a single asset for use in a larger piece of work. This method makes the use of generative AI more viable, as any designs made will retain editability and human judgement while gaining efficiency and the ability to workshop different ideas instantly.

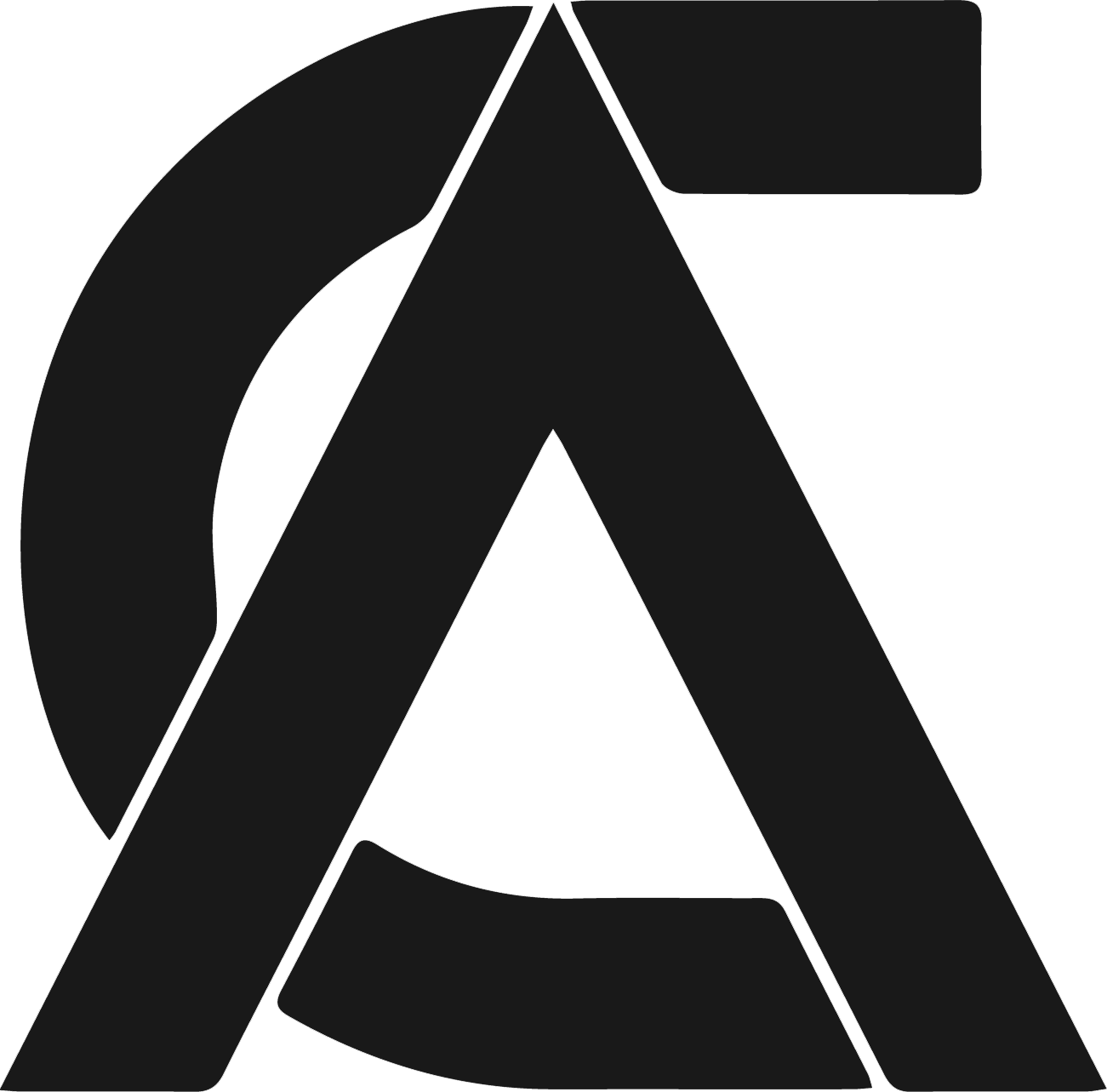

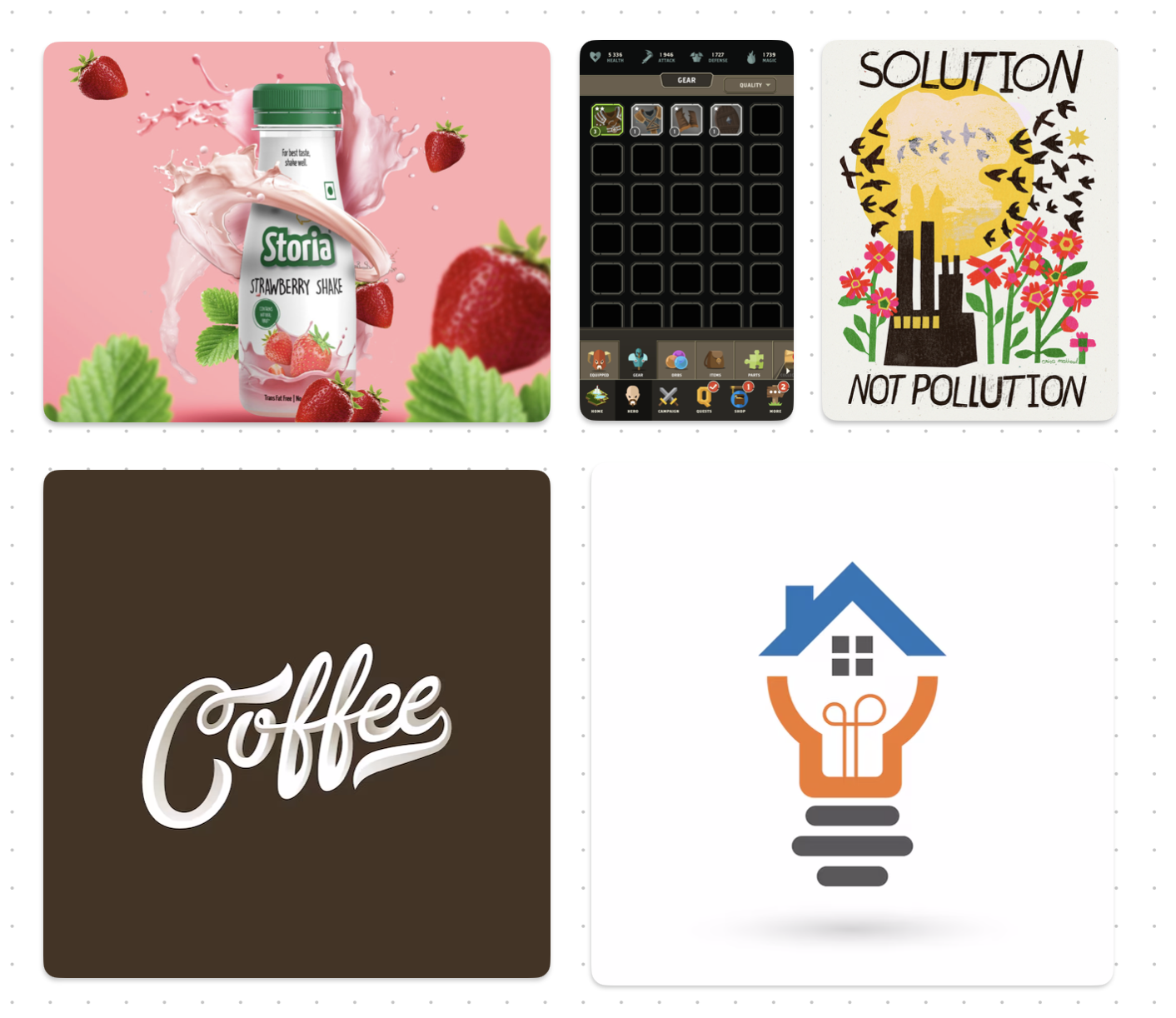

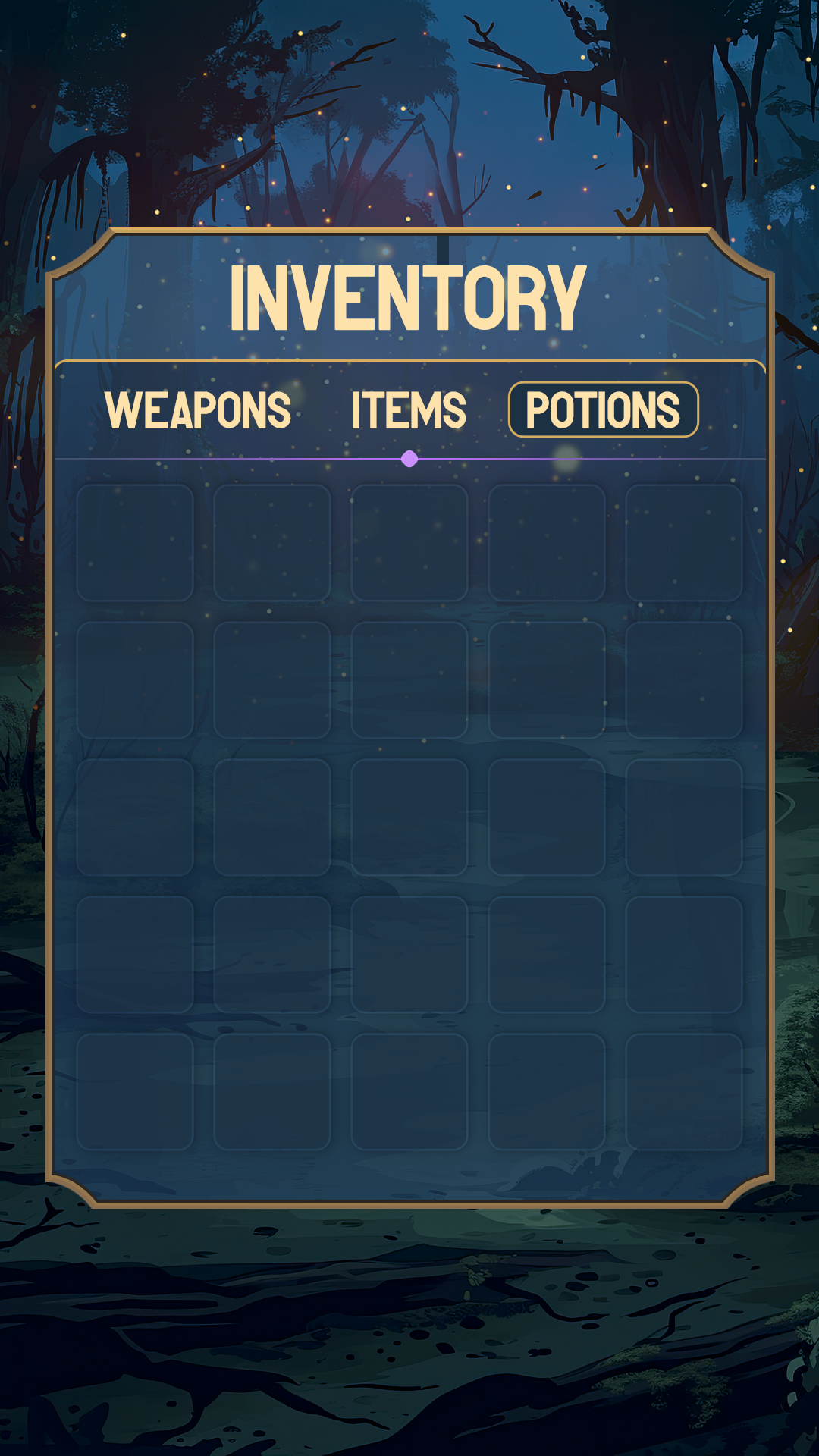

I started the practical aspect of this project by developing five fictional client briefs to give myself designs and general ideas to work towards. To incorporate a variety of designs, I made briefs for a logo, a social media post, an environmental poster, a game UI asset and the logotype for a company. For inspiration, I collected a series of pre-existing designs from the web that reflected my intended approach for each client brief.

I began sketching out ideas for each design and noting down which ideas could be made easier through the use of generative AI. A good example of this was the social media post, where I needed to create an advertisement for a fictional drink brand called ‘Sipfinity’. I wanted to frame a mock-up of the drink on a beach next to a palm tree. However, when I couldn’t find a suitable stock image, I marked this design as one that could be generated by AI. When considering how else generative AI could assist my workflow, I found that I could use the Generative Expand feature to seamlessly extend the borders of images so that they fit into my designs.

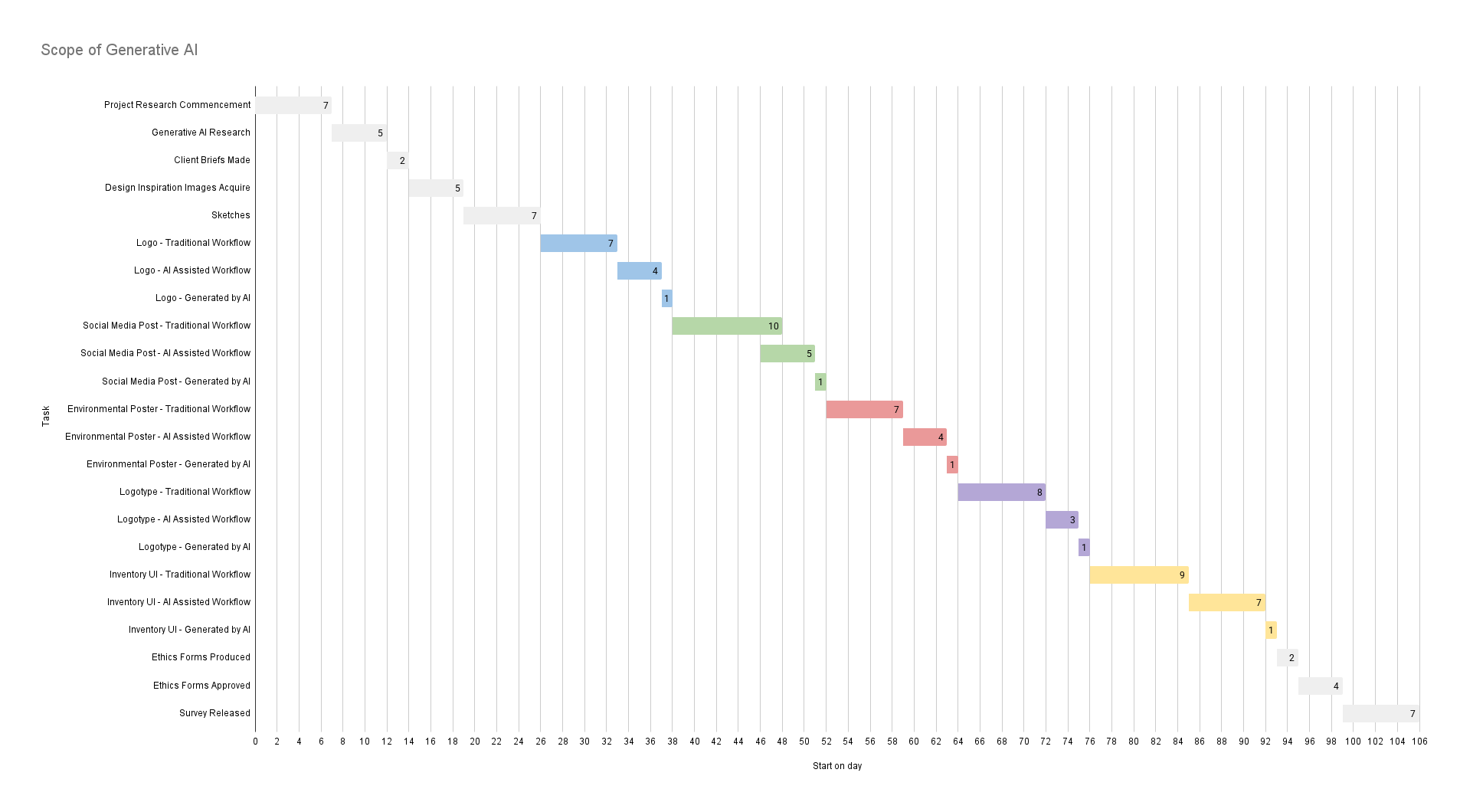

Throughout the project, I utilised the Agile project methodology (formalised in the Agile Manifesto in 2001). This workflow emphasises flexibility over linear steps, meaning that different parts of the project can be worked on simultaneously. This proved especially beneficial for me, as the project required multiple designs to be created at the same time. In the same vein, this methodology was well suited to adapting to change. It strengthened the project because I was able to continue working on other aspects whilst problem-solving when certain designs were taking longer than anticipated, allowing the project as a whole to continue despite unforeseen delays.

Creating day-by-day goals that split up each different client brief into smaller segments facilitated management of the project’s objectives. This made it far easier to track my progress and therefore monitor if I needed more time for certain parts of the project due to unpredictable setbacks. I also included contingency days so that, if I fell behind, I had time to catch up. Additionally, it was crucial to record the time taken for each design to be made in order to evaluate the effectiveness of each design workflow using a quantifiable measure.

When making the designs, I started by creating the content for each client brief using traditional design methods. This process began with ideation and sketching, where I quickly drew out basic ideas for each brief. For some of these ideas, I drew on common themes surrounding the companies I was creating the briefs for. The fictional brand for the logo project was an IoT company called Lumeo, so the designs I sketched for it related to lighting and housing. For the social media post project, I was creating an advertisement for a drink brand called Sipfinity. Given its tropical flavours, I instantly thought of centring the ad around a beach and using pastel colours. For every piece of content in this project, I primarily used Adobe Photoshop and Illustrator. I chose Photoshop because I have a lot of experience with the software, and because its integrated generative AI feature made it easy to bring gen AI into the designs I created using an AI-assisted workflow.

On average, each design I made using traditional methods took around a week, from ideation to final version. For the next set of designs, I used generative AI to assist my workflow. This meant I could create images faster, as I could instantly produce specific assets I required or solve problems to do with content spacing. The bulk of these designs were still created under my artistic direction, with AI used as a tool to enhance my work rather than replace it. This method sped up my workflow, and I was typically able to create each of these designs in three to four days. Finally, I produced a set of designs using generative AI alone, by typing in a specific prompt explaining each client brief and requesting an image. While these were created almost instantly, each design came with quality issues, such as unwanted asymmetry, text-rendering problems and an absence of editability.

In order to investigate which designs the general public preferred, I created a survey where respondents could choose which design from each client brief they liked the most. Respondents were not informed beforehand of which designs were created by AI and which were created by me. While the results of the survey would not provide a definitive answer on which workflow is the best to use, they would produce useful data that designers can use to infer how AI is perceived in the industry, and how to adapt their work to suit it. To distribute the survey, I released it online to groups of university students, as well as groups with people of varying ages. This was to collect responses from a diverse group of people, to act as a microcosm of the general public. At the time of writing, the survey has 49 responses. For every brief, the design with the most votes was created either with an AI-assisted workflow or with traditional methods. No design produced entirely by generative AI received the most votes. This result supports my view that human design, using generative AI in an assistive capacity, is the best way to utilise this technology. Specifically, this data provides evidence that generative AI cannot replace the creativity of content that is made via human artistic direction and judgement. However, generative AI’s technical abilities are lowering the skill level required to operate within the creative industry, making the market far more competitive. It is worth noting that there are other factors involved in determining whether or not generative AI can replace the job of a designer, such as the scope of the project and the extent to which AI is used without human intervention.
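The vote counting described above can be sketched in a few lines of Python. This is only an illustration of how such a tally might be done, not the tool actually used for the survey: the function names, the workflow labels and the three example responses are all hypothetical placeholders and are not the real survey data.

```python
from collections import Counter

# Hypothetical labels for the three workflows compared in the survey.
WORKFLOWS = ("traditional", "ai_assisted", "ai_generated")


def tally_votes(responses):
    """Count votes per workflow for each brief.

    `responses` is a list of dicts mapping a brief name to the workflow
    whose design the respondent preferred for that brief.
    """
    tallies = {}
    for response in responses:
        for brief, workflow in response.items():
            if workflow not in WORKFLOWS:
                raise ValueError(f"unknown workflow: {workflow!r}")
            tallies.setdefault(brief, Counter())[workflow] += 1
    return tallies


def top_workflows(tallies):
    """Return, for each brief, the workflow(s) with the most votes (ties kept)."""
    winners = {}
    for brief, counts in tallies.items():
        best = max(counts.values())
        winners[brief] = sorted(w for w, c in counts.items() if c == best)
    return winners


# Placeholder responses for illustration only, NOT the real survey results.
example_responses = [
    {"logo": "ai_assisted", "poster": "traditional"},
    {"logo": "ai_assisted", "poster": "ai_generated"},
    {"logo": "traditional", "poster": "traditional"},
]

example_tallies = tally_votes(example_responses)
example_winners = top_workflows(example_tallies)
```

Keeping ties in `top_workflows` avoids silently declaring a winner when two workflows receive the same number of votes, which is plausible with a sample of this size.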

Throughout this project there were several small problems I needed to solve for the designs I wanted to create. A good example of this was the AI-assisted Lumeo logo, where I wanted to emulate the glow of a lamp. Photoshop’s native glow effect is useful in some cases but lacks realism and depth for more detailed purposes. To overcome this, I experimented with multiple layers of different colours, blended together using the Linear Dodge blend mode. Another skill I learnt was product image manipulation. For my social media post, I wanted to superimpose a mock-up of the Sipfinity drink can onto a rock. To make this more realistic, I experimented with shadows, highlights and Iris Blur until I attained a look I was happy with.
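To show why stacking layers with linear dodge builds up a glow, the sketch below applies the standard Linear Dodge (Add) formula, in which each colour channel of the blend layer is added to the base and clipped at full brightness. It is a simplified illustration that ignores layer opacity, fill and colour management, and the colour values and function names are hypothetical rather than taken from the Lumeo design.

```python
def linear_dodge(base, blend):
    """Linear Dodge (Add): add each channel and clip the result at 1.0.

    Colours are (r, g, b) tuples with channel values between 0.0 and 1.0.
    """
    return tuple(min(1.0, b + l) for b, l in zip(base, blend))


def stack_glow(base, layers):
    """Blend a list of glow layers onto a base colour, one after another."""
    result = base
    for layer in layers:
        result = linear_dodge(result, layer)
    return result


# Hypothetical values: a dark background and three warm glow layers.
background = (0.05, 0.05, 0.10)
glow_layers = [
    (0.30, 0.20, 0.05),
    (0.40, 0.25, 0.05),
    (0.50, 0.30, 0.10),
]

glow_colour = stack_glow(background, glow_layers)
print(glow_colour)
```

Because each layer can only add brightness, overlapping layers push the centre of the glow towards a clipped highlight while the edges stay tinted, which is what gives the effect more depth than a single glow layer.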

Time management was paramount to this project’s success. Developing a comprehensive plan beforehand enabled me to track my progress easily and adapt my targets accordingly if delayed. If I were to do the project again, I would produce multiple AI-generated images using different AI models for each client brief, in order to diversify the options presented in the survey and strengthen the outcome.

Appendix 1 - Pear icon

https://www.flaticon.com/free-icon/pear_415767?term=fruit&page=1&position=20&origin=style&related_id=415767

Appendix 2 - Apple Icon

https://www.flaticon.com/free-icon/apple_415733?term=fruit&page=1&position=5&origin=style&related_id=415733

Appendix 3 - Grape Icon

https://www.flaticon.com/free-icon/grape_4057278?term=fruit&page=1&position=42&origin=style&related_id=4057278

Appendix 4 - Rock Texture

https://unsplash.com/photos/brown-boulder-on-water-FQkgtrVJJy4

Appendix 5 - Can Mockup

https://mockups-design.com/soda-can-mockup/#google_vignette

Appendix 6 - Inventory Background

https://pixabay.com/illustrations/swamp-nature-landscape-water-8521293/

Appendix 7 - Firefly Texture

https://www.freepik.com/free-photos-vectors/firefly-texture

Appendix 8 - Water Splashes Asset

https://www.freepik.com/free-vector/collection-realistic-water-splashes_5183143.htm#fromView=keyword&page=1&position=7&uuid=bdd31576-dd45-45a7-a42b-cd12d11e7eec&query=Transparent+Water+Splash+Png

Appendix 9 - Wood Texture

https://unsplash.com/photos/a-close-up-of-a-wooden-floor-with-a-black-background-CvgLy7yN-Fk

Appendix 10 - Coffee Mug

https://unsplash.com/photos/coffee-beans-on-white-ceramic-mug-beside-stainless-steel-spoon-LMzwJDu6hTE

Appendix 11 - Coffee Stain Brushes

https://www.brusheezy.com/brushes/57035-free-coffee-stain-photoshop-brushes

Appendix 12 - Coffee Jar

https://unsplash.com/photos/brown-liquid-in-white-ceramic-mug-ANj8BrYRmRU

Appendix 13 - Cartoon Background

https://unsplash.com/photos/KHHgCEN_HAw

Feuerriegel, S. et al. (2023) 'Generative AI,' Business & Information Systems Engineering, 66(1), pp. 118. Available at:

https://doi.org/10.1007/s12599-023-00834-7

MacLean, I. (2024) 'Exploring the Spectrum: A Comparative analysis of AI image tools,' Sciencia Consulting. Available at:

https://scienciaconsulting.com/a-comparative-analysis-of-ai-image-tools/?utm_source=chatgpt.com

Robison, K. (2025) 'OpenAI rolls out image generation powered by GPT-4o to ChatGPT,' The Verge, 25 March. Available at:

https://www.theverge.com/openai/635118/chatgpt-sora-ai-image-generation-chatgpt?utm_source=chatgpt.com

Donelli, F. (2024) 'Generative AI and the creative industry: Finding balance between apologists and critics,' Medium, 17 November. Available at:

https://medium.com/%40fdonelli/generative-ai-and-the-creative-industry-finding-balance-between-apologists-and-critics-686f449862fc

Korotenko, K. (2023) 'How to spot AI-Generated Images,' Everypixel Journal - Your Guide to the Entangled World of AI, 14 November. Available at:

https://journal.everypixel.com/how-to-spot-ai-generated-images?utm_source=chatgpt.com